Segmentation using Unet with Keras and Jupyter Notebook

This project implements biomedical image segmentation with the U-Net model, using Keras and Jupyter Notebook. The architecture was inspired by the paper U-Net: Convolutional Networks for Biomedical Image Segmentation. I mainly referred to the images and code of these GitHub repositories: zhixuhao github and ugent-korea github

Abstract

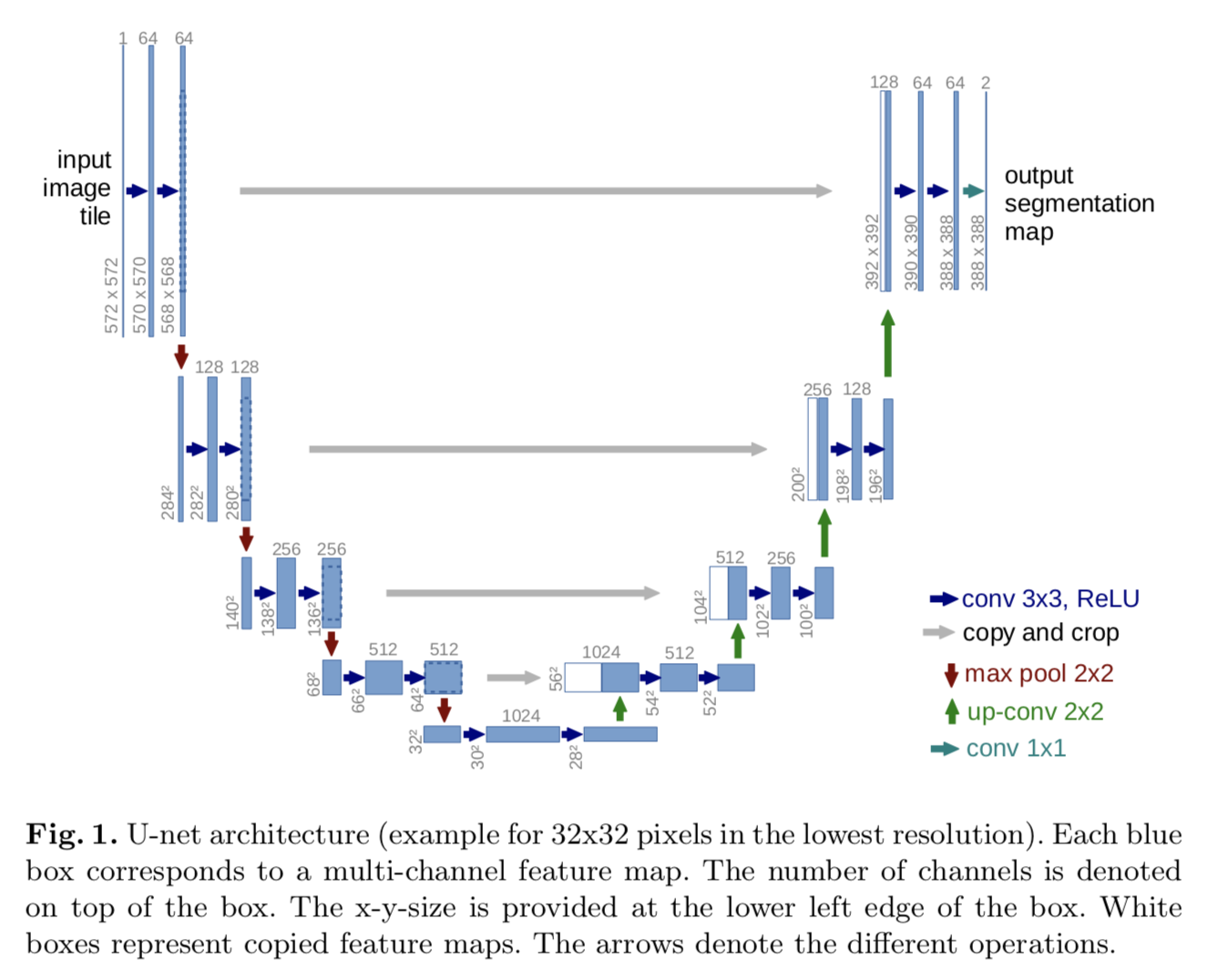

The authors of the paper propose a simple and effective end-to-end image segmentation network architecture for medical images. The proposed network, called U-Net, relies on three main factors for effective training:

- U-shaped network structure with two parts: a contracting path and an expanding path

- Faster training than the sliding-window approach: patch units and overlap-tile

- Data augmentation and loss weighting: elastic deformation and weighted cross-entropy
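In the paper, the weighted cross-entropy in the last bullet is a pixel-wise softmax over the final feature map followed by a cross-entropy in which every pixel's term is scaled by a weight map w(x). The paper builds that map as w(x) = w_c(x) + w_0 · exp(-(d_1(x) + d_2(x))^2 / (2σ^2)), where w_c balances class frequencies, d_1 and d_2 are the distances to the borders of the nearest and second-nearest cells, and it uses w_0 = 10 and σ ≈ 5 pixels. The NumPy sketch below assumes the weight map is already computed and only shows the loss itself; the function names are illustrative and not taken from this repository.

```python
import numpy as np


def pixel_softmax(logits):
    """Softmax over the class axis (last axis) of an (H, W, K) score map."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def weighted_cross_entropy(logits, labels, weights):
    """Sum over pixels of -w(x) * log p_{label(x)}(x).

    logits:  (H, W, K) raw scores from the final 1x1 convolution
    labels:  (H, W) integer class index of each pixel
    weights: (H, W) precomputed weight map w(x)
    """
    probs = pixel_softmax(logits)
    rows, cols = np.indices(labels.shape)
    p_true = probs[rows, cols, labels]  # probability given to the true class at each pixel
    return float(-(weights * np.log(p_true)).sum())


# Tiny example: 2x2 image, 2 classes, uninformative scores (p = 0.5 everywhere).
logits = np.zeros((2, 2, 2))
labels = np.array([[0, 1], [1, 0]])
weights = np.array([[1.0, 1.0], [1.0, 10.0]])  # one "border" pixel weighted 10x
loss = weighted_cross_entropy(logits, labels, weights)  # equals 13 * log(2)
```

The paper sums the loss over all pixels; many Keras implementations average it instead, which only rescales the gradient.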

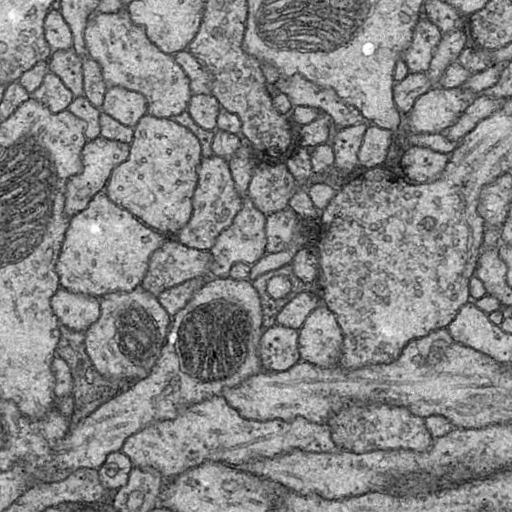

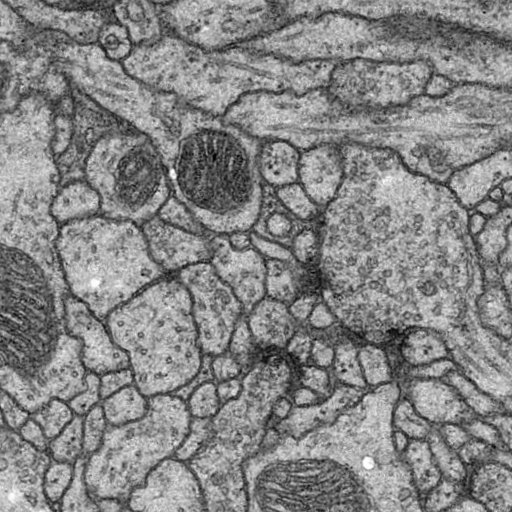

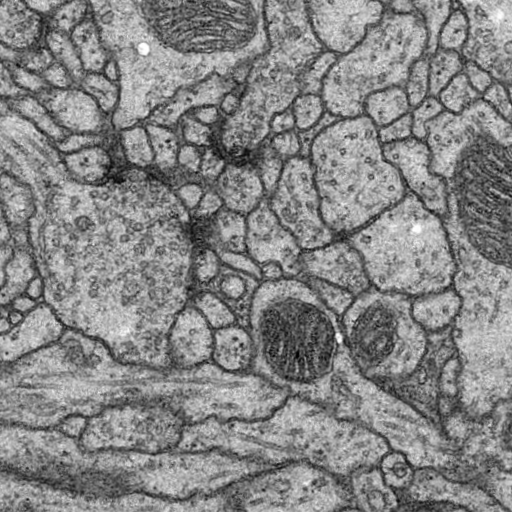

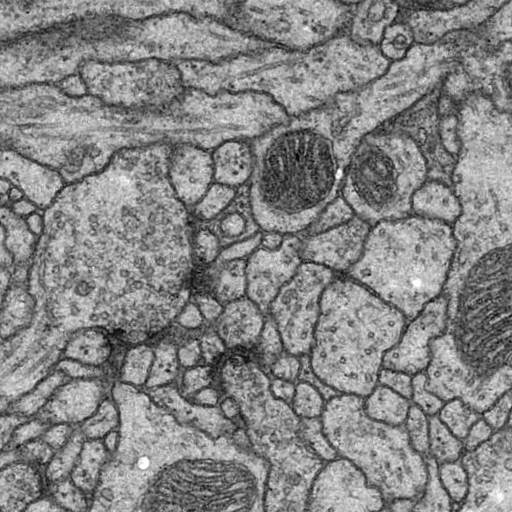

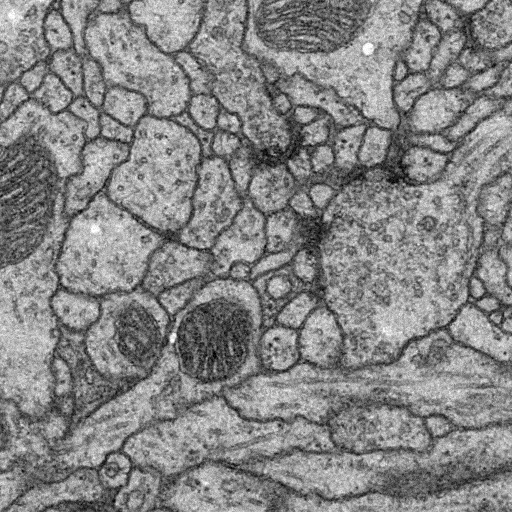

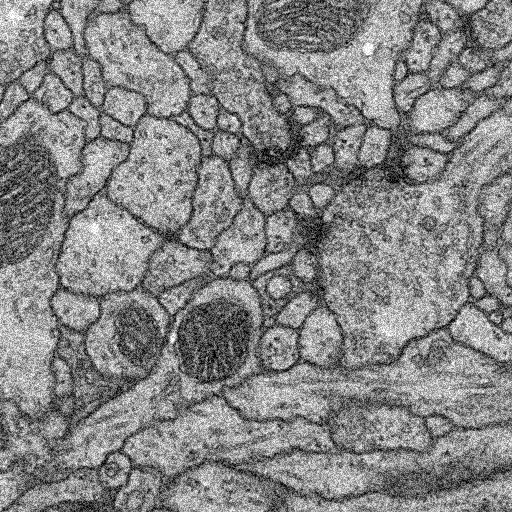

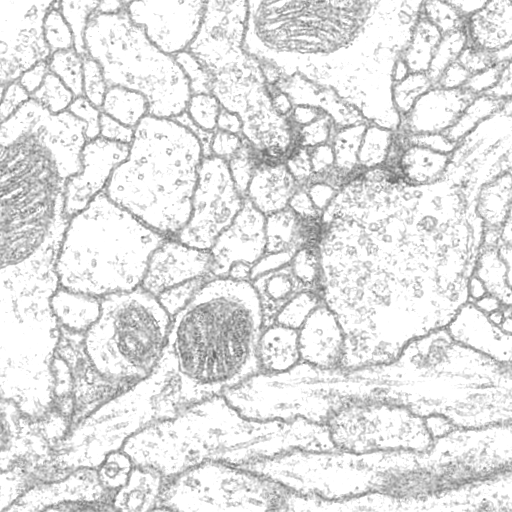

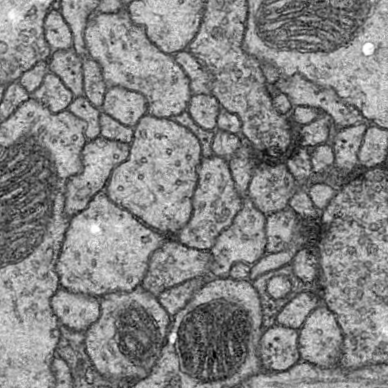

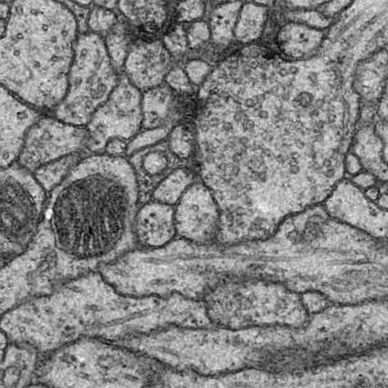

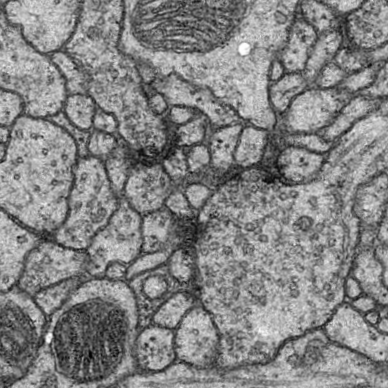

Dataset

The dataset we used is the serial section Transmission Electron Microscopy (ssTEM) data set of the Drosophila first instar larva ventral nerve cord (VNC), downloaded from the ISBI Challenge: Segmentation of neural structures in EM stacks

- Black and white segmentation of cell membranes and cells in EM (electron microscopy) images.

- The images are large but few in number, so data augmentation is needed.

- The data set contains 30 images of size 512x512 each for train, train-labels and test.

- The test labels are not provided, because they are reserved for the ISBI competition.

- To compute the competition's evaluation metrics yourself, split off part of the training set for testing.
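Splitting off a test set, as the last bullet suggests, can be sketched as follows. This assumes the train-volume and train-labels stacks have already been loaded into NumPy arrays of shape (30, 512, 512), for example with a TIFF reader; `split_train_test` and the default of 5 held-out slices are hypothetical choices, not part of this repository.

```python
import numpy as np


def split_train_test(images, labels, n_test=5, seed=0):
    """Randomly hold out n_test image/label pairs from the training stack.

    images, labels: arrays of shape (N, H, W), e.g. (30, 512, 512) for ISBI.
    Returns (train_images, train_labels, test_images, test_labels).
    """
    if images.shape[0] != labels.shape[0]:
        raise ValueError("images and labels must contain the same number of slices")
    if not 0 < n_test < images.shape[0]:
        raise ValueError("n_test must leave at least one slice on each side")
    order = np.random.default_rng(seed).permutation(images.shape[0])
    test_idx, train_idx = order[:n_test], order[n_test:]
    # Index both stacks with the same indices so each image keeps its own label.
    return images[train_idx], labels[train_idx], images[test_idx], labels[test_idx]


# Example with small stand-in arrays instead of the real 512x512 slices.
images = np.arange(30 * 4 * 4).reshape(30, 4, 4)
labels = images * 2
train_x, train_y, test_x, test_y = split_train_test(images, labels, n_test=5)
```

A fixed `seed` keeps the split reproducible, so evaluation numbers from different training runs stay comparable.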

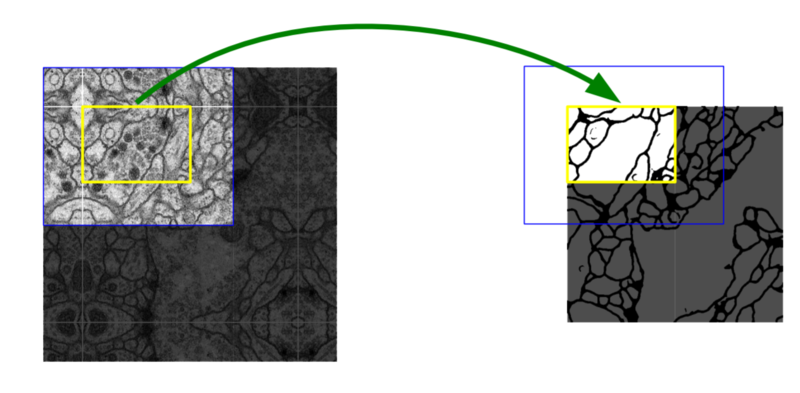

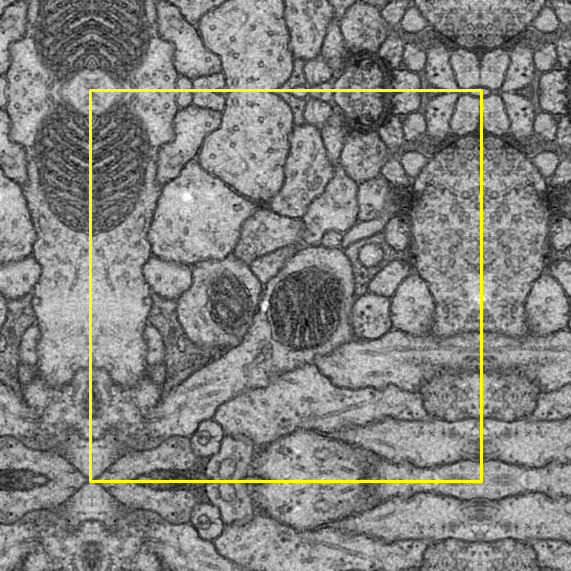

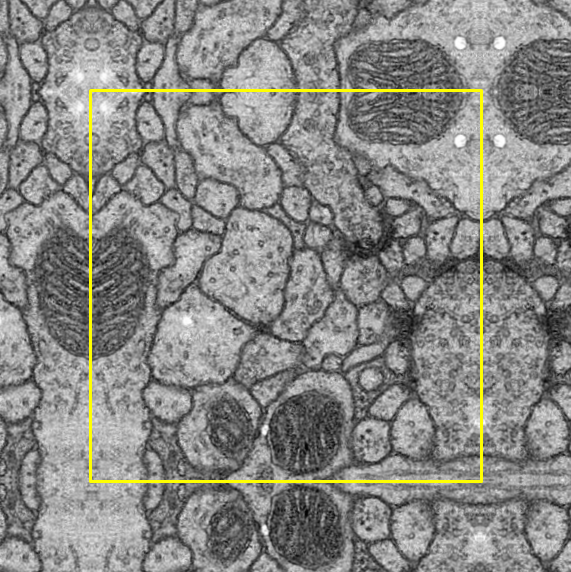

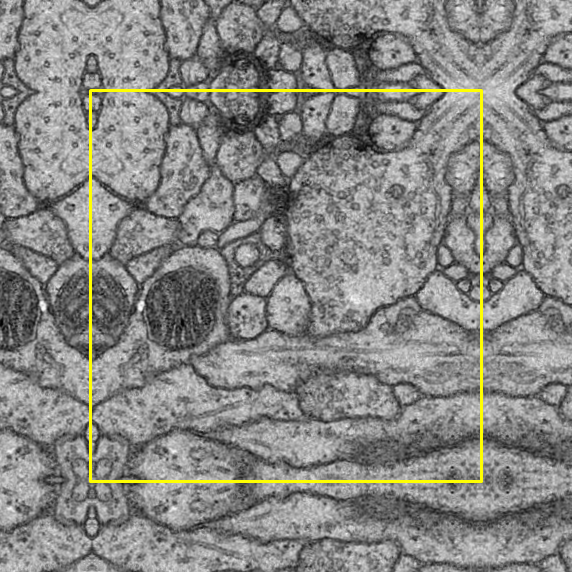

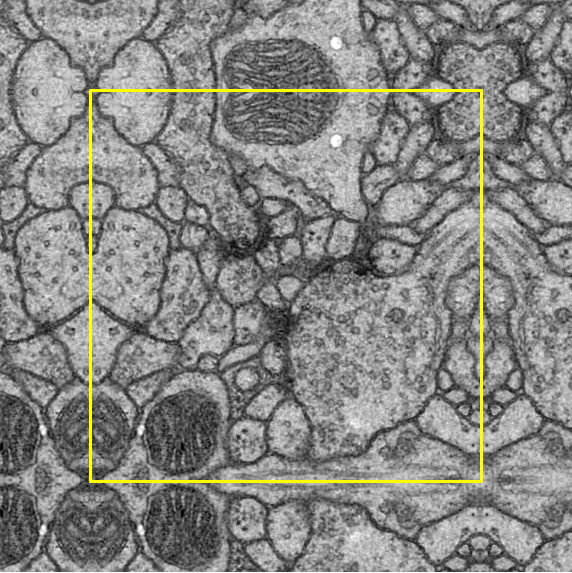

Overlap-tile

- The patch method has a low overlap ratio, which improves detection speed.

- However, because a large patch covers a wide area of the image at once, it captures context well but localizes less precisely.

- The paper proposes the U-Net architecture and the overlap-tile strategy to solve this localization problem.

In short: because the EM image is large, the model's input is larger than the patch (yellow) it actually segments. Where that input extends past the image border, the empty part is filled in by mirroring the image.
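The overlap-tile strategy described above can be sketched with NumPy's mirror padding. The tile sizes are an assumption borrowed from the paper's configuration (572x572 input tile, 388x388 output region, so 92 pixels of context per side); `overlap_tiles` is a hypothetical helper, not a function from this repository.

```python
import numpy as np


def overlap_tiles(image, out_size=388, margin=92):
    """Cut a 2-D image into overlapping input tiles for overlap-tile prediction.

    The network is assumed to predict only the central out_size x out_size
    region of each (out_size + 2*margin)-pixel input tile. Context that falls
    outside the image is filled in by mirroring the image at its border.
    """
    h, w = image.shape
    n_rows = -(-h // out_size)  # ceiling division: output tiles per column
    n_cols = -(-w // out_size)
    # Mirror-pad: `margin` pixels of context on every side, plus extra rows/cols
    # at the bottom/right so the output tiles cover the image completely.
    extra_h = n_rows * out_size - h
    extra_w = n_cols * out_size - w
    padded = np.pad(image, ((margin, margin + extra_h), (margin, margin + extra_w)), mode="reflect")
    tile = out_size + 2 * margin
    tiles = [
        padded[r * out_size:r * out_size + tile, c * out_size:c * out_size + tile]
        for r in range(n_rows)
        for c in range(n_cols)
    ]
    return np.stack(tiles)


image = np.arange(512 * 512, dtype=np.float32).reshape(512, 512)
tiles = overlap_tiles(image)  # shape (4, 572, 572) for a 512x512 EM slice
```

To reassemble a full prediction, place each tile's output at row `r * out_size`, column `c * out_size` and crop the result back to the original image size. `mode="reflect"` mirrors without repeating the edge pixel; `mode="symmetric"` repeats it.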

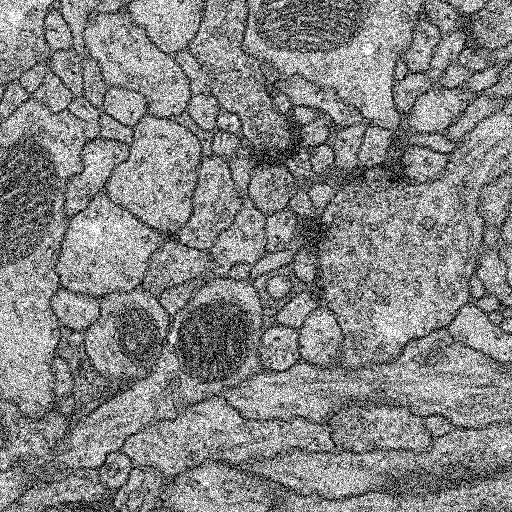

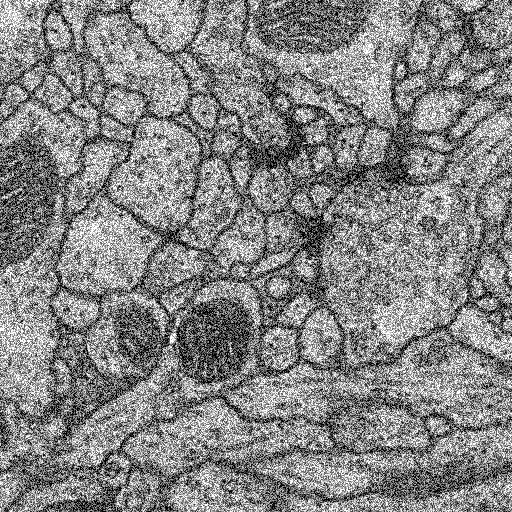

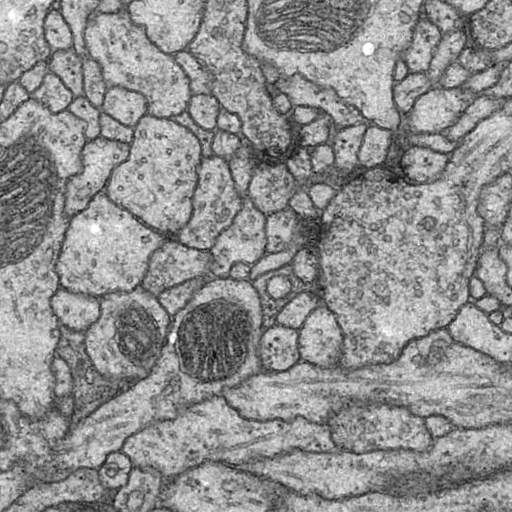

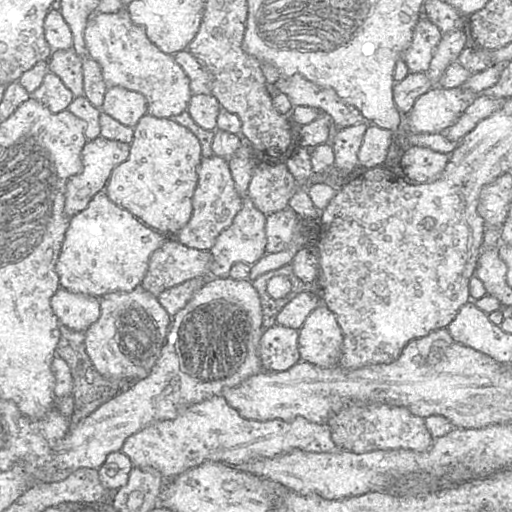

Data Augmentation

We preprocessed the images for data augmentation with the following operations:

- Flip

- Gaussian noise

- Uniform noise

- Brightness

- Elastic deformation

- Crop

- Pad

To understand these operations easily, refer to this page.
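The flip, noise and brightness operations listed above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the code in augmentation.py: pixel values are assumed to be uint8 on a 0-255 scale, "vertical" is taken to mean upside down, and the function names are hypothetical.

```python
import numpy as np


def flip(image, mode):
    """Flip an image: 'vertical' (upside down), 'horizontal' (left-right) or 'both'."""
    if mode == "vertical":
        return np.flipud(image)
    if mode == "horizontal":
        return np.fliplr(image)
    if mode == "both":
        return np.flipud(np.fliplr(image))
    raise ValueError(f"unknown flip mode: {mode!r}")


def gaussian_noise(image, std, rng):
    """Add zero-mean Gaussian noise with standard deviation `std` (0-255 scale)."""
    noisy = image.astype(np.float64) + rng.normal(0.0, std, image.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)


def uniform_noise(image, intensity, rng):
    """Add noise drawn uniformly from [-intensity, intensity)."""
    noisy = image.astype(np.float64) + rng.uniform(-intensity, intensity, image.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)


def brightness(image, intensity):
    """Shift every pixel by the constant `intensity`, saturating at 0 and 255."""
    return np.clip(image.astype(np.int16) + intensity, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(512, 512)).astype(np.uint8)
label = ((image > 127) * 255).astype(np.uint8)  # stand-in binary mask
# Geometric changes go to both image and label; intensity changes to the image only.
aug_image, aug_label = flip(image, "both"), flip(label, "both")
noisy = gaussian_noise(aug_image, std=10, rng=rng)
brighter = brightness(aug_image, intensity=20)
```

The key design point is that flips move pixels, so the label must receive exactly the same flip, while noise and brightness change only intensities and must not touch the label.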

Original Image

| Augmentation | Variant 1 | Variant 2 | Variant 3 |
|---|---|---|---|
| Flip | Vertical | Horizontal | Both |
| Gaussian noise | Standard deviation: 10 | Standard deviation: 50 | Standard deviation: 100 |
| Uniform noise | Intensity: 10 | Intensity: 50 | Intensity: 100 |
| Brightness | Intensity: 10 | Intensity: 20 | Intensity: 30 |
| Elastic deformation | Random deformation: 1 | Random deformation: 2 | Random deformation: 3 |
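The paper describes its elastic deformation as random displacement vectors on a coarse 3x3 grid, sampled from a Gaussian with a standard deviation of 10 pixels and turned into per-pixel displacements by bicubic interpolation. The NumPy sketch below follows that recipe with two simplifications: bilinear instead of bicubic interpolation, and nearest-neighbour sampling clamped at the border. It is not necessarily what augmentation.py does, and how its parameters map to the "Random deformation" levels in the table is not known; `elastic_deform`, `grid` and `sigma` are hypothetical names.

```python
import numpy as np


def elastic_deform(image, label, grid=3, sigma=10.0, rng=None):
    """Warp an image and its label with the same smooth random displacement field."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape
    # Random (dy, dx) displacement, in pixels, at each node of a grid x grid lattice.
    coarse = rng.normal(0.0, sigma, size=(2, grid, grid))
    # Every pixel's position in lattice coordinates (0 .. grid - 1).
    gy = np.linspace(0, grid - 1, h)
    gx = np.linspace(0, grid - 1, w)
    y0 = np.minimum(gy.astype(int), grid - 2)[:, None]  # lower lattice row, shape (h, 1)
    x0 = np.minimum(gx.astype(int), grid - 2)[None, :]  # left lattice column, shape (1, w)
    ty = gy[:, None] - y0  # fractional offsets in [0, 1]
    tx = gx[None, :] - x0

    def upsample(c):
        """Bilinearly interpolate one (grid, grid) component to (h, w)."""
        return (c[y0, x0] * (1 - ty) * (1 - tx) + c[y0, x0 + 1] * (1 - ty) * tx
                + c[y0 + 1, x0] * ty * (1 - tx) + c[y0 + 1, x0 + 1] * ty * tx)

    dy, dx = upsample(coarse[0]), upsample(coarse[1])
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Backward mapping: each output pixel copies its displaced source pixel
    # (nearest neighbour, clamped at the border), so the label stays binary.
    src_y = np.clip(np.rint(yy + dy), 0, h - 1).astype(int)
    src_x = np.clip(np.rint(xx + dx), 0, w - 1).astype(int)
    return image[src_y, src_x], label[src_y, src_x]


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(512, 512)).astype(np.uint8)
label = ((image > 127) * 255).astype(np.uint8)  # stand-in binary mask
warped_image, warped_label = elastic_deform(image, label, rng=rng)
```

Using one displacement field for both arrays keeps every label pixel aligned with its image pixel; sampling the image bilinearly instead would give a smoother result at the cost of a second code path.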

Crop and Pad

| Crop | Left bottom | Left top | Right bottom | Right top |
|---|---|---|---|---|

Padding is compulsory after cropping, because the image has to fit the input size of the U-Net model.

We used symmetric padding, in which the pad is the reflection of the vector mirrored along the edge of the array. We chose symmetric padding over the other padding options we tried because it gave the lowest loss.

For visibility, a yellow border is drawn around the original image: the area outside the border is symmetric padding, and the area inside is the original image.

| Pad | Left bottom | Left top | Right bottom | Right top |
|---|---|---|---|---|
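The crop-then-pad step described above can be sketched with `np.pad(..., mode="symmetric")`, which is NumPy's name for the mirror-including-the-edge padding the text describes. The crop size of 400 pixels, the corner names and the choice to pad on the sides that were cut away are assumptions for illustration; `crop_and_pad` is a hypothetical helper.

```python
import numpy as np


def crop_and_pad(image, corner="left_top", crop=400):
    """Keep a crop x crop corner of a 2-D image, then symmetric-pad it back to
    the original shape so it still fits the U-Net input size.

    corner: "left_top", "left_bottom", "right_top" or "right_bottom".
    """
    h, w = image.shape
    horiz, vert = corner.split("_")
    if horiz not in ("left", "right") or vert not in ("top", "bottom"):
        raise ValueError(f"unknown corner: {corner!r}")
    y0 = 0 if vert == "top" else h - crop
    x0 = 0 if horiz == "left" else w - crop
    cropped = image[y0:y0 + crop, x0:x0 + crop]
    # Pad on the sides that were cut away; "symmetric" mirrors the array
    # including its edge pixel (np.pad's "reflect" would skip the edge pixel).
    pad_y = (0, h - crop) if vert == "top" else (h - crop, 0)
    pad_x = (0, w - crop) if horiz == "left" else (w - crop, 0)
    return np.pad(cropped, (pad_y, pad_x), mode="symmetric")


image = np.arange(512 * 512).reshape(512, 512)
out = crop_and_pad(image, "right_bottom", crop=400)  # still 512x512
```

As with flips, the label must be cropped and padded with the same corner and crop size so that it stays aligned with the image.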

Network Architecture

Contracting Path (Fully Convolutional)

- A typical convolutional network.

- 3x3 convolution layers with max-pooling and dropout

- Extracts image features accurately, but reduces the spatial size of the feature map.

Expanding Path (Deconvolution)

- Outputs the segmentation map by upsampling the feature map

- 2x2 up-convolution and 3x3 convolution layers with concatenation

- The drawback is that localization information lost while the feature map shrinks cannot be recovered by upsampling alone.

- Therefore, the feature map after each up-convolution is concatenated with the feature map of the same level in the contracting path, so that less localization information is lost.

- The last layer is a 1x1 convolution that maps each feature vector to the output classes.
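To make the contracting/expanding bookkeeping concrete, the sketch below traces the feature-map side length through the paper's configuration: unpadded 3x3 convolutions (each trims one pixel per side), 2x2 max-pooling, 2x2 up-convolutions that double the size, and cropping of the skip feature map before concatenation. With a 572x572 input it reproduces the paper's 388x388 output. Many Keras U-Net implementations use `padding="same"` instead, in which case each convolution preserves the size and no cropping is needed; `unet_sizes` is an illustrative helper, not part of model.py.

```python
def unet_sizes(input_size=572, depth=4):
    """Trace the feature-map side length through a U-Net with unpadded 3x3 convs.

    Returns (skip_sizes, crop_margins, output_size): the size of each contracting
    level's feature map, the border cropped from it before concatenation in the
    expanding path, and the side length of the final segmentation map.
    """
    size, skips = input_size, []
    for _ in range(depth):
        size -= 4  # two unpadded 3x3 convolutions, each trims 1 px per side
        if size % 2:
            raise ValueError("feature map must have even size before 2x2 max-pooling")
        skips.append(size)  # copied across to the expanding path
        size //= 2  # 2x2 max-pooling with stride 2
    size -= 4  # two 3x3 convolutions at the bottom of the "U"
    crops = []
    for skip in reversed(skips):
        size *= 2  # 2x2 up-convolution doubles the size
        crops.append((skip - size) // 2)  # crop the skip map to match, then concatenate
        size -= 4  # two 3x3 convolutions after concatenation
    return skips, crops, size  # the final 1x1 convolution keeps the size


print(unet_sizes())  # ([568, 280, 136, 64], [4, 16, 40, 88], 388)
```

The 184-pixel difference between input and output (92 pixels per side) is exactly the context that overlap-tile prediction has to supply around each output patch.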

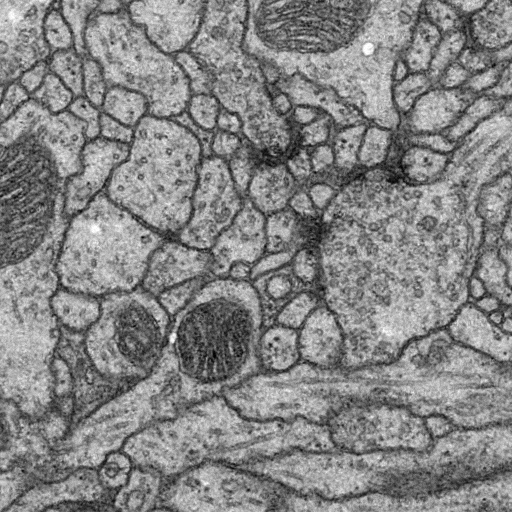

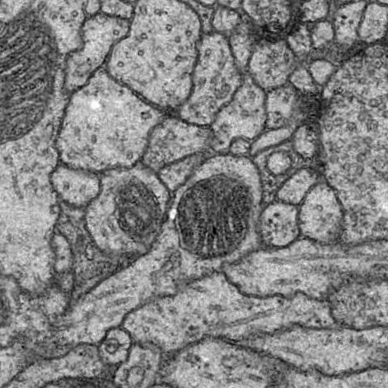

Result

Usage

After you download the code, your directory tree should look like this:

```
keras-Unet/
├── data
│   ├── test-volume.tif
│   ├── train-labels.tif
│   └── train-volume.tif
├── images
├── jupyter.ipynb
├── augmentation.py
├── model.py
├── preprocessing.py
├── README.md
├── train.py
├── utills.py
└── requirement.txt
```

To use a different data directory, change data_path in preprocessing.py and augmentation.py.

In either case, the three original competition files (test-volume, train-labels, train-volume) must be placed in data_path.

```shell
$ python3 augmentation.py
$ python3 preprocessing.py
$ python3 train.py
```

To use the program, run the three steps in order: augmentation, preprocessing, then training. The result is saved as prediction.tif.

You can also open jupyter.ipynb in Jupyter Notebook to see how U-Net works.
